Improve the quality of your customer data today.

On average, a database contains 8-10% duplicate records. These duplicates result in waste and inefficiencies and cloud your ability to get a single, accurate view of the customer.

MatchUp is the most powerful and accurate matching and deduping solution on the market to combat the problem of duplicate records. What sets it apart from the rest is its intelligent parsing, which understands and separates the various components of global addresses. By combining deep domain knowledge of international address formats with advanced fuzzy matching techniques, MatchUp gives you the ability to identify and merge/purge even the most difficult-to-spot duplicate records.

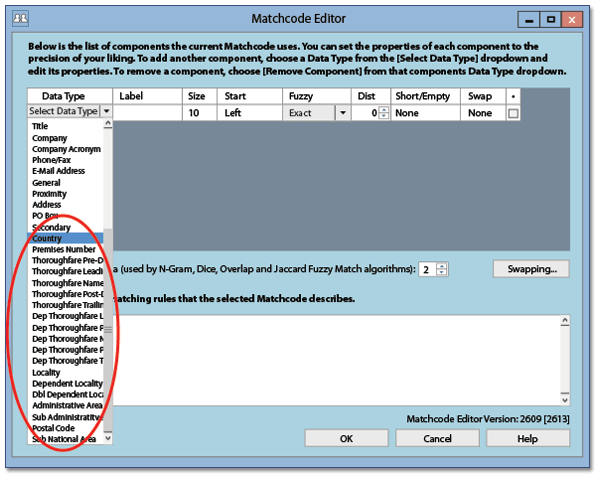

MatchUp uses a matchcode to determine whether two records should be considered duplicates. The matchcode can be one of MatchUp's predefined matchcodes or a custom one that you create in the Matchcode Editor.
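To make the idea concrete, here is a minimal Python sketch of a match key built from matchcode-style components. It is not MatchUp's matchcode format or API: the `Component` class, the field names and the component sizes are hypothetical, and a real matchcode can also apply fuzzy comparisons rather than relying on exact key equality.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """A hypothetical matchcode component: a field and how many leading characters to use."""
    field: str
    size: int


def normalize(value: str) -> str:
    # Upper-case and drop anything that is not a letter or digit.
    return re.sub(r"[^A-Z0-9]", "", value.upper())


def match_key(record: dict, components: list[Component]) -> str:
    # Concatenate the leading characters of each normalized component.
    return "|".join(normalize(record.get(c.field, ""))[: c.size] for c in components)


# Hypothetical matchcode: 5 chars of surname, 3 chars of first name, full postcode.
NAME_AND_POSTCODE = [Component("last", 5), Component("first", 3), Component("postcode", 10)]

a = {"first": "Elizabeth", "last": "Tailor", "postcode": "SW1A 1AA"}
b = {"first": "Eliza", "last": "TAILOR", "postcode": "sw1a1aa"}
print(match_key(a, NAME_AND_POSTCODE) == match_key(b, NAME_AND_POSTCODE))  # True
```

Truncating each component to a fixed size is what lets "Elizabeth" and "Eliza" produce the same key here.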

The following matchcode components (data types) are available for use in identifying duplicates:

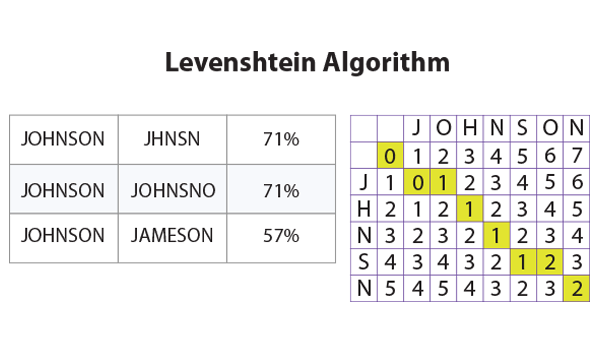

MatchUp combines Melissa’s deep domain knowledge of contact data with over 20 fuzzy matching algorithms to match similar records and quickly dedupe your database.

MatchUp employs the following fuzzy matching algorithms to identify “non-exact matching” duplicate records:

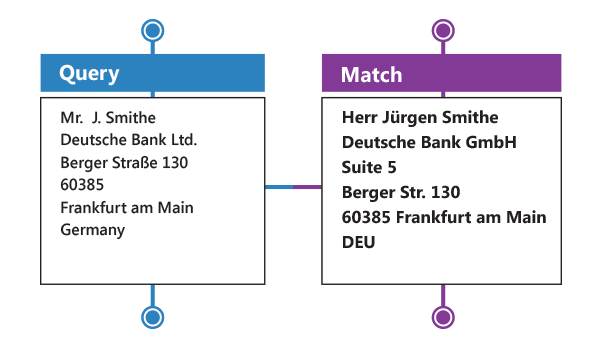
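As an illustration of this family of techniques, the sketch below implements Levenshtein edit distance, one widely used fuzzy string measure. We are not claiming it corresponds to any specific algorithm in MatchUp's list, and the 0.8 similarity threshold is an arbitrary, hypothetical value.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions or substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def similarity(a: str, b: str) -> float:
    """Scale edit distance to a 0..1 score (1.0 means identical, ignoring case)."""
    a, b = a.upper(), b.upper()
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def is_fuzzy_match(a: str, b: str, threshold: float = 0.8) -> bool:
    # The default threshold is illustrative only; tune it for your data.
    return similarity(a, b) >= threshold


print(is_fuzzy_match("Tailor", "Taylor"))  # True: one substitution in six characters
```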

The World Edition of MatchUp supports 12 countries, including Canada, Germany, the U.K., and Australia. MatchUp's advanced deduping treats characters with diacritics as equivalent to their plain Latin counterparts and recognises keywords that mean the same thing but are written differently (e.g. Germany and DEU).
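The sketch below shows one common way to fold diacritics using Python's standard `unicodedata` module, plus a lookup that maps different spellings of a country to a single canonical form. The `COUNTRY_ALIASES` table is a tiny, hypothetical stand-in, not MatchUp's reference data.

```python
import unicodedata

# Hypothetical alias table; real products rely on much larger, country-aware tables.
COUNTRY_ALIASES = {
    "DEU": "GERMANY", "DE": "GERMANY", "DEUTSCHLAND": "GERMANY",
    "GBR": "UNITED KINGDOM", "UK": "UNITED KINGDOM",
}


def fold_diacritics(text: str) -> str:
    # NFKD splits "ü" into "u" plus a combining diaeresis; dropping combining marks leaves plain Latin.
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def canonical_country(value: str) -> str:
    # Fold accents, upper-case, then map any known alias to its canonical name.
    key = fold_diacritics(value).upper().strip()
    return COUNTRY_ALIASES.get(key, key)


print(fold_diacritics("Müller"))                                # Muller
print(canonical_country("DEU") == canonical_country("Germany"))  # True
```

This simple folding does not cover every case; for example, it leaves `ß` unchanged.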

MatchUp also has several distinctive features that can help identify duplicates in useful ways:

1. Survivorship for Golden Record Creation

MatchUp can select the best elements from multiple records to survive consolidation, which is ideal for creating golden records for a single customer view. This feature is available in Microsoft SQL Server Integration Services (SSIS) and Pentaho PDI.
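Survivorship comes down to a rule per field that picks the winning value from a group of duplicates. The sketch below assumes two hypothetical rules (keep the longest name; keep the most recently updated non-empty email and phone). It only illustrates the concept: MatchUp's own survivorship options are configured in SSIS or Pentaho PDI, not written like this.

```python
from datetime import date


def newest_non_empty(records, field):
    """Value from the most recently updated record that has this field filled in."""
    for r in sorted(records, key=lambda r: r["updated"], reverse=True):
        if r.get(field):
            return r[field]
    return ""


def longest_non_empty(records, field):
    """Longest value, on the (hypothetical) assumption that it is the most complete."""
    return max((r[field] for r in records if r.get(field)), key=len, default="")


# Hypothetical per-field survivorship rules.
RULES = {"name": longest_non_empty, "email": newest_non_empty, "phone": newest_non_empty}


def build_golden_record(group):
    # Apply each field's rule across the whole duplicate group.
    return {field: rule(group, field) for field, rule in RULES.items()}


group = [
    {"name": "Elizabeth Tailor", "email": "", "phone": "020 7946 0000", "updated": date(2021, 3, 1)},
    {"name": "Mrs E Tailor", "email": "liz@example.com", "phone": "", "updated": date(2023, 6, 9)},
]
golden = build_golden_record(group)
print(golden)
```

The golden record takes the fuller name from the older record and the email from the newer one, which neither source record holds on its own.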

2. Proximity Matching

MatchUp's patented distance algorithm uses latitude-longitude coordinates and proximity thresholds to identify duplicate records that are geographically close together. For instance, MatchUp can use location attributes to detect matching records that have different addresses (such as a company with two different entrances) but lie within a specified distance of each other.
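MatchUp's patented algorithm is not described here, so the sketch below substitutes the standard haversine great-circle formula to show the basic idea of a distance threshold. The 200-metre threshold, the `lat`/`lon` field names and the sample coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def within_proximity(a, b, threshold_m=200):
    # threshold_m is an illustrative value, not a MatchUp default.
    return haversine_m(a["lat"], a["lon"], b["lat"], b["lon"]) <= threshold_m


# Two hypothetical entrances of the same site, roughly 100 m apart.
front = {"lat": 51.5007, "lon": -0.1246}
back = {"lat": 51.5010, "lon": -0.1260}
print(within_proximity(front, back))  # True
```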

3. Householding

MatchUp can identify and consolidate records that are members of the same household to better understand customer relationships, lifecycle, and needs. You can also use MatchUp to bring together multiple business accounts into “corporate families” to build insight and better evaluate the total sales relationship. Householding can also be used to eliminate unnecessary multiple mailings to the same household to cut down on wasted print, production, and postage costs.
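A minimal sketch of householding: records are grouped under a key built from the surname and a normalized address. The key is a hypothetical, deliberately simple rule; real household matching also has to cope with spelling variants, different surnames within one household, and so on.

```python
import re
from collections import defaultdict


def household_key(record):
    """Hypothetical household key: surname plus normalized street address and city."""
    surname = record["name"].split()[-1].upper()
    address = re.sub(r"[^A-Z0-9]", "", record["address"].upper())
    city = record["city"].strip().upper()
    return (surname, address, city)


def group_households(records):
    # Collect the names of everyone who shares a household key.
    households = defaultdict(list)
    for r in records:
        households[household_key(r)].append(r["name"])
    return list(households.values())


people = [
    {"name": "Elizabeth Tailor", "address": "123 Albion Street", "city": "London"},
    {"name": "James Tailor", "address": "123 Albion Street", "city": "London"},
    {"name": "Ann Patel", "address": "9 Park Row", "city": "Leeds"},
]
print(group_households(people))  # [['Elizabeth Tailor', 'James Tailor'], ['Ann Patel']]
```

A mailing program could then send one piece per group instead of one per person.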

MatchUp offers three methods of operation (or ways to match records):

1. Read / Write Deduping

Compares records in one or more databases at once. Each unique group will have one record that receives an “output” status; the other matching records receive a “duplicate” status. Ideal for matching entire databases at one time.
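The output/duplicate labelling of a read/write pass can be sketched as follows, assuming each record is reduced to a match key by a hypothetical `key_fn`. Exact key equality stands in for MatchUp's fuzzy comparisons here.

```python
def read_write_dedupe(records, key_fn):
    """Batch pass: the first record in each matching group is "output", the rest are "duplicate"."""
    seen = set()
    statuses = []
    for record in records:
        key = key_fn(record)
        statuses.append("duplicate" if key in seen else "output")
        seen.add(key)
    return statuses


# Hypothetical match key: upper-cased surname plus postcode without spaces.
def surname_postcode(r):
    return (r["last"].upper(), r["postcode"].replace(" ", "").upper())


records = [
    {"last": "Tailor", "postcode": "SW1A 1AA"},
    {"last": "TAILOR", "postcode": "sw1a1aa"},
    {"last": "Patel", "postcode": "M1 1AE"},
]
print(read_write_dedupe(records, surname_postcode))  # ['output', 'duplicate', 'output']
```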

2. Incremental Deduping

Enables real-time matching by comparing each incoming record (such as one from a web form or call centre) against the existing master database. If the incoming record is not a duplicate, it can be added to the database.
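The incremental flow can be sketched with an in-memory dictionary standing in for the master database. `IncrementalDeduper` and the email-based match key are hypothetical; in practice the master data would live in a real database and the comparison would be fuzzier.

```python
class IncrementalDeduper:
    """Hypothetical in-memory stand-in for a master database indexed by match key."""

    def __init__(self, key_fn):
        self.key_fn = key_fn
        self.master = {}  # match key -> stored record

    def submit(self, record):
        """Check one incoming record; add it only if no existing record shares its key.

        Returns (added, record), where record is the existing match when added is False.
        """
        key = self.key_fn(record)
        if key in self.master:
            return False, self.master[key]
        self.master[key] = record
        return True, record


deduper = IncrementalDeduper(lambda r: r["email"].strip().lower())
print(deduper.submit({"email": "liz@example.com"})[0])     # True: new record added
print(deduper.submit({"email": " LIZ@example.com "})[0])   # False: matches existing record
```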

3. Hybrid Deduping

Combines the first two methods, with the flexibility to customise the process so that an incoming record is matched against a small cluster of potential matches. With hybrid deduping, you can store the match keys in a proprietary manner. Ideal for real-time data entry or batch processing of entire lists.
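The sketch below illustrates the hybrid idea under stated assumptions: the application stores coarse cluster keys itself (here a postcode, in a Python dictionary) and runs a finer fuzzy comparison only against the few records that share that key. `HybridIndex`, the postcode key and the 0.85 threshold are hypothetical; the fuzzy score comes from the standard library's `difflib.SequenceMatcher`.

```python
from collections import defaultdict
from difflib import SequenceMatcher


class HybridIndex:
    """Hypothetical store of cluster keys with fuzzy matching inside each cluster."""

    def __init__(self, threshold=0.85):
        self.clusters = defaultdict(list)  # cluster key -> records
        self.threshold = threshold

    @staticmethod
    def cluster_key(record):
        # Hypothetical coarse key: postcode without spaces, upper-cased.
        return record["postcode"].replace(" ", "").upper()

    def find_match(self, record):
        """Return the first stored record in the same cluster whose name is similar enough."""
        for candidate in self.clusters.get(self.cluster_key(record), []):
            ratio = SequenceMatcher(None, record["name"].upper(), candidate["name"].upper()).ratio()
            if ratio >= self.threshold:
                return candidate
        return None

    def add(self, record):
        self.clusters[self.cluster_key(record)].append(record)


index = HybridIndex()
index.add({"name": "Elizabeth Tailor", "postcode": "SW1A 1AA"})
print(index.find_match({"name": "Elizabeth Taylor", "postcode": "sw1a1aa"}))
```

Clustering first keeps the number of fuzzy comparisons per incoming record small, which is what makes this practical for both real-time entry and batch lists.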

Melissa's Data Quality tools help organisations of all sizes verify and maintain data so they can effectively communicate with their customers via postal mail, email, and phone. Our additional data quality tools include

Data deduplication is the process of matching and merging the streams of customer data coming into a company's systems from multiple channels and sources, so that each customer is represented only once. When a company has more than one communication channel, pieces of the same information can end up spread across different fields, records, and databases. This can leave individual records, or even entire systems, inaccurate or inconsistent, which undermines the single customer view.

Duplicate data occurs when a database holds multiple records for the same individual. For example, Miss Elizabeth Tailor of 123 Albion Street, London may also have been entered into the same database as Mrs Tailor of 123 Albion St, London. Within a year, ten percent of a business's database will accumulate duplicate data because people move, change marital status, or change email addresses and phone numbers, to name just a few causes.
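The Tailor example can be caught with a little normalization before comparison. The sketch below assumes a tiny, hypothetical abbreviation table and title list; it ignores first names, so on its own it would also pair up different people in the same household.

```python
import re

# Hypothetical, deliberately tiny lookup tables.
STREET_ABBREVIATIONS = {"STREET": "ST", "ROAD": "RD", "AVENUE": "AVE"}
TITLES = {"MR", "MRS", "MISS", "MS", "DR"}


def normalize_address(address):
    # Split into alphanumeric tokens and replace known street words with abbreviations.
    tokens = re.findall(r"[A-Z0-9]+", address.upper())
    return " ".join(STREET_ABBREVIATIONS.get(t, t) for t in tokens)


def surname(name):
    # Drop titles such as Miss/Mrs and keep the last remaining word.
    tokens = [t for t in re.findall(r"[A-Z]+", name.upper()) if t not in TITLES]
    return tokens[-1] if tokens else ""


def likely_same_person(a, b):
    return (surname(a["name"]) == surname(b["name"])
            and normalize_address(a["address"]) == normalize_address(b["address"]))


first = {"name": "Miss Elizabeth Tailor", "address": "123 Albion Street, London"}
second = {"name": "Mrs Tailor", "address": "123 Albion St, London"}
print(normalize_address(first["address"]))   # 123 ALBION ST LONDON
print(likely_same_person(first, second))     # True
```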

Duplicate data can also enter a company's database through the multiple points of contact customers use to communicate with the organisation. For example, a customer may give a call centre different information than they would give online, leaving pertinent information either uncollected or in a separate account that is not linked to their original one. The result is inconsistent information spread across the organisation's departments, which undermines the single customer view and “golden record” initiatives.

Contact data deduplication offers numerous benefits, including improved data accuracy, cost savings, enhanced productivity, better customer experiences, informed decision-making, compliance with regulations, and optimised database performance.

The University of Washington increased its revenue tenfold with data quality.