What We Do

Powerful Data Quality Tools for Every Stage of Your Data Journey

Our flexible and customisable end-to-end data quality solutions:

- Profile & Monitor: Uncover where bad data enters your systems and maintain data quality standards over time.

- Cleanse & Standardise: Use advanced rules and machine learning to correct and standardise data for maximum accuracy.

- Verify & Enrich: Validate global addresses, names, phones, emails, and IPs, and enrich records with missing demographic details.

- Match & Consolidate: Identify and merge duplicate records using intelligent parsing and domain knowledge to create a single, trusted “golden record.”

Melissa G2 Awards

Consistently recognised and praised

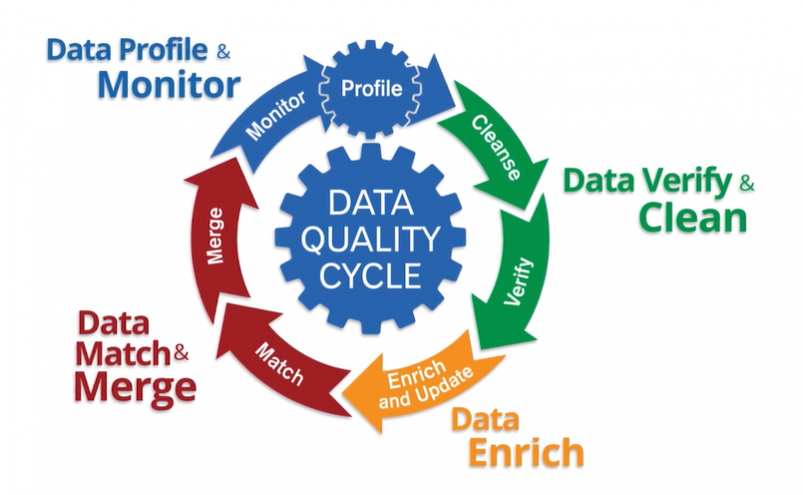

The Data Quality Cycle

Melissa’s Data Quality Tools follow a continuous cycle to ensure your data remains accurate, consistent, and reliable across all systems.

- Profile: Identify data issues and monitor quality across systems.

- Cleanse: Standardise, correct and remove errors for accurate records.

- Verify: Validate addresses, emails, phone numbers and other key data.

- Enrich: Append missing demographic or business information.

- Match: Consolidate duplicates to create a single golden record.

- Apply Rules: Enforce data quality standards across workflows.

- Discover: Analyse trends and monitor ongoing data quality improvements.

The Quick Wins After Using Data Quality Tools

Improved Decision Making

Clean, accurate data empowers teams to make faster, smarter and more confident decisions.

Reduced Costs & Errors

Increased Revenue

Reliable data enables more targeted marketing, accurate forecasting and better pricing strategies.

Time Savings

Automation frees your team from manual data cleansing, validation and correction tasks.

Melissa Data Quality Tools

Address Verification

Verify, correct and standardise addresses in 240+ countries worldwide.

Address Autocomplete

Autocomplete addresses as they are entered to improve data entry speed and accuracy.

Email Verification

Verify an email address in real-time or batch to improve deliverability.

Phone Verification

Confirm UK & international mobile and landline numbers are valid and callable.

Name Verification

Parse and genderise names and identify vulgar or fictitious names at point of entry.

Merge, Purge & Deduplication

Identify and eliminate duplicate records that cost money and prevent a single customer view.

Geocoding

Convert postal addresses to precise latitude-longitude coordinates for improved mapping, visualisation, risk assessment and targeted marketing.

Identity Verification

Utilise a range of industry-leading KYC & AML solutions to meet your business needs.

Unison: All-in-One Data Quality Platform

Unison is Melissa’s on-premises Customer Data Platform that profiles, cleanses, verifies, and monitors your customer data — all without programming. Designed for data stewards, it delivers accurate, consistent and reliable data to power better business decisions.

Simple Visual Interface

Schedule jobs using an easy and intuitive project wizard to automatically run hourly, daily, weekly, monthly or yearly

Intuitive Matching GUI

Powerful fuzzy matching and golden record survivorship with simple built-in rules

Dedupe several files at once, from varying sources & formats: flat file, CSV, SQL, and Oracle

Review and tweak results inside the platform, in real-time
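To give a feel for what fuzzy matching and golden record survivorship mean in practice, here is a minimal Python sketch. It is not Unison's matching engine or its built-in rules: the similarity measure (the standard library's `difflib.SequenceMatcher`), the 0.85 threshold, the field names and the "keep the longest value" survivorship rule are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio for two trimmed, lower-cased strings."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def is_duplicate(rec_a: dict, rec_b: dict, threshold: float = 0.85) -> bool:
    """Hypothetical match rule: name and address must both be similar enough."""
    return (
        similarity(rec_a["name"], rec_b["name"]) >= threshold
        and similarity(rec_a["address"], rec_b["address"]) >= threshold
    )


def golden_record(records: list[dict]) -> dict:
    """Hypothetical survivorship rule: per field, keep the longest non-empty value."""
    fields = sorted({key for rec in records for key in rec})
    return {f: max((rec.get(f) or "" for rec in records), key=len) for f in fields}


# Two made-up records that describe the same customer.
a = {"name": "Jon Smith", "address": "12 High Street, Leeds", "email": ""}
b = {"name": "John Smith", "address": "12 High St, Leeds", "email": "john@example.com"}

# Merge only when the pair passes the match rule.
merged = golden_record([a, b]) if is_duplicate(a, b) else None
print(merged)
```

Real survivorship rules are usually richer than this, for example preferring the most recently updated or most recently verified value, which is why being able to review and tweak results matters.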

Secure Data Processing

Unmatched security with on-premises data management

Control access to financial, medical or other sensitive personal data

Secure local browser access for stewards & users to run data quality regimens

Fast Distributed Performance

Distributed computing handles large data sets & millions of records

True multi-threaded batch performance across the organisation, regardless of system or format

Scales to move accurate data through enterprise pipelines in record time

Built-In Visual Reporting

Process and monitor jobs with robust visual analytics

Robust reporting for stewards & stakeholders

Detailed logging of results with audit trails

Need Help?

Common Questions About Data Quality

What is data quality?

Data quality refers to how accurate, complete, consistent and reliable your data is. High-quality data ensures that businesses can make confident decisions, deliver better customer experiences and stay compliant with regulations.

Why is data quality important?

Poor data quality can lead to costly errors, missed opportunities, and compliance risks. Good data quality improves decision-making, boosts revenue, reduces operational waste and builds customer trust.

How do you improve data quality?

You can improve data quality by profiling your data to detect issues, cleansing and standardising formats, verifying contact information, removing duplicates and enriching records with missing details. Using automated data quality tools makes this process more efficient and scalable.
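As a rough illustration of the first steps described above, the following Python sketch profiles a few records for completeness, standardises one field and flags duplicate values. The sample records, field names and helper functions are hypothetical and use only the standard library; dedicated tools apply far more extensive rules and reference data.

```python
from collections import Counter

# Made-up sample records; the phone numbers come from a range reserved for drama use.
records = [
    {"email": "Ann@Example.com ", "phone": "0113 496 0000"},
    {"email": "ann@example.com", "phone": ""},
    {"email": "", "phone": "0113 496 0001"},
]


def profile_completeness(rows, fields):
    """Profile: share of rows holding a non-blank value for each field."""
    return {f: sum(1 for r in rows if r.get(f, "").strip()) / len(rows) for f in fields}


def standardise_email(value):
    """Cleanse: trim surrounding whitespace and lower-case the address."""
    return value.strip().lower()


def duplicate_values(rows, field):
    """Match: standardised values that appear more than once (blanks ignored)."""
    counts = Counter(standardise_email(r[field]) for r in rows if r[field].strip())
    return [value for value, n in counts.items() if n > 1]


completeness = profile_completeness(records, ["email", "phone"])
duplicates = duplicate_values(records, "email")
print(completeness)
print(duplicates)
```

Note that the two email values only show up as duplicates once they have been standardised, which is why cleansing normally comes before matching.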

How do you ensure data quality?

To ensure data quality long-term, businesses should implement data governance policies, automate validation processes and continuously monitor data for errors. Platforms like Melissa Unison help maintain accuracy and consistency across all data sources.
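One way to picture automated validation and continuous monitoring is a small set of rules applied to every incoming batch, with an alert when too many records fail. The sketch below is an assumption-laden example, not how Melissa Unison works internally: the rules, field names and 25% threshold are hypothetical, and the email check only tests the shape of the value, not whether the mailbox is deliverable.

```python
import re

# Hypothetical rule set: each field maps to a function that returns True when valid.
EMAIL_SHAPE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

RULES = {
    "email": lambda v: bool(EMAIL_SHAPE.match(v)),  # shape check only
    "postcode": lambda v: bool(v.strip()),          # must not be blank
}


def validate(record):
    """Return the names of the fields that fail their rule."""
    return [field for field, rule in RULES.items() if not rule(record.get(field, ""))]


def failure_rate(records):
    """Share of records with at least one failing field."""
    return sum(1 for r in records if validate(r)) / len(records)


# Made-up batch of incoming records.
batch = [
    {"email": "ann@example.com", "postcode": "LS1 4AP"},
    {"email": "not-an-email", "postcode": "LS1 4AP"},
    {"email": "bob@example.com", "postcode": ""},
    {"email": "cat@example.com", "postcode": "M1 1AE"},
]

MAX_FAILURE_RATE = 0.25  # assumed governance threshold
rate = failure_rate(batch)
needs_review = rate > MAX_FAILURE_RATE
print(rate, needs_review)
```

Running a check like this on a schedule, and logging the rate each time, turns a one-off clean-up into ongoing monitoring.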

When will an organisation see results from implementing data quality practices?

The timeline varies with the size of the organisation, the complexity of the data environment, the scope of the data quality initiatives and the existing state of the data. Generally, organisations can expect some initial results within a few months to a year. However, data quality is an ongoing process, and continuous effort is needed to maintain and improve it over time. Here's a rough timeline for when organisations might start to see results:

- Short-term (First few months):

  - Immediate Data Validation: Organisations should see immediate improvements in data accuracy at the point of entry as data validation rules are implemented. This can prevent obvious data errors from entering the system.

  - Quick Data Cleansing: If there were glaring data issues during the initial data quality assessment, implementing data cleansing processes should lead to rapid improvements in data quality for specific datasets.

- Medium-term (3 to 6 months):

  - Improved Data Profiling: Data profiling over a few months will provide more comprehensive insights into data quality issues and trends, enabling better prioritisation of data quality improvement efforts.

  - Enhanced Data Governance: As data governance practices mature, the organisation will have better-defined roles, responsibilities, and processes for managing data quality, leading to increased accountability and better data quality management.

- Long-term (6 months to 1 year):

  - Ongoing Data Quality Improvement: Continuous monitoring and improvement of data quality practices will lead to sustained data accuracy and reliability.

  - Better Business Insights: As data quality improves, business analytics and decision-making will be based on more accurate and trustworthy data, leading to better insights and outcomes.

  - Increased User Trust: As users experience the benefits of improved data quality, they will develop greater trust in the data and be more willing to rely on it for their activities.

- Continuous Improvement (Beyond 1 year):

  - Data-Driven Culture: Over time, organisations that prioritise data quality will foster a data-driven culture where data quality practices become ingrained in daily operations and decision-making processes.

  - Advanced Data Quality Capabilities: With time and experience, organisations may develop more sophisticated data quality capabilities, such as real-time data monitoring and advanced analytics for detecting and addressing data issues proactively.

Data quality is not a one-time effort; it requires ongoing commitment. Regular assessments, audits and continuous improvement are necessary to maintain high-quality data and ensure that the organisation continues to derive value from its data assets. As data quality practices become integral to the organisation's operations, the benefits in improved decision-making, operational efficiency and customer satisfaction become increasingly evident.

On-Prem or Cloud

HIPAA / HITRUST & SOC 2 Certified

CCPA & GDPR Compliant

99.99% Uptime with SLA

Ready to Start Your Demo?

Start today with Melissa's wide range of Data Quality Solutions, Tools, and Support.